AI is celebrated for the innovation and efficiency it brings, and feared for its potential to upend the world as we know it. As AI development and adoption accelerate, companies are recognizing its commercial value while becoming more aware of its risks as they integrate the technology into their businesses.

New survey data show that although leaders agree responsible AI (RAI) should be a major C-suite management issue, only some companies have the practices in place. ZDNET recently shared a report based on input from 500 C-suite business leaders that highlights a significant gap in implementing RAI governance best practices. Almost three-quarters (73%) of respondents said AI guidelines are indispensable. However, just 6% have established clear ethical guidelines for AI use, and 36% indicate they might implement them during the next 12 months.

Gen AI Market Predictions & Public Interest

Executives in the boardroom share a desire to adopt new AI responsibly, optimize existing systems with the latest technologies, and use insights to bring their vision to life safely under responsible AI governance. To succeed in today’s rapidly changing business landscape, every company must have an AI strategy with trust at its core.

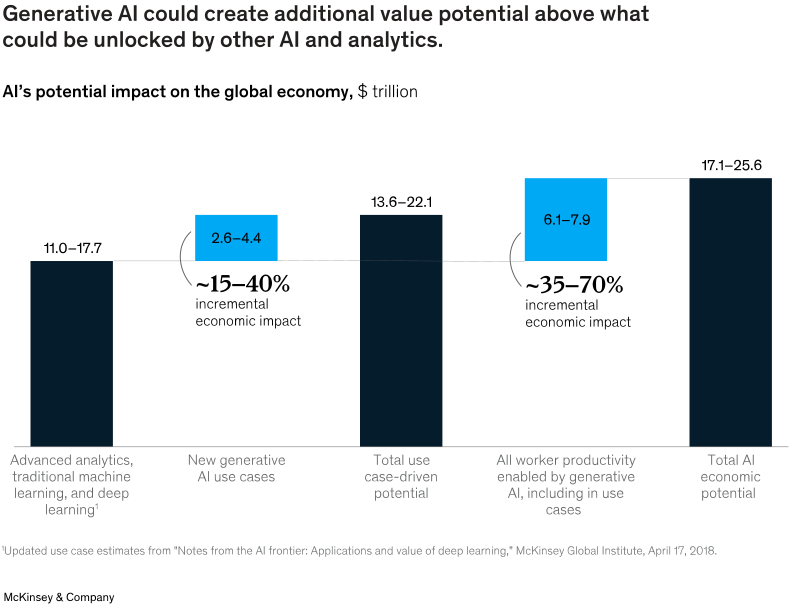

Market predictions anticipate remarkable growth in generative AI. McKinsey’s research highlights its significant potential, estimating that it could contribute between $2.6 trillion and $4.4 trillion annually across various industries, including banking, high-tech, and life sciences. Generative AI is projected to bring a seismic shift in how businesses unlock value and drive growth.

The surge in popularity of generative AI, part of the broader technology that Andrew Ng called the new electricity, is evident from ChatGPT’s rapid rise: it attracted 100 million users in just two months and redefined our interaction with technology. Foundation models such as GPT-4, Google’s PaLM 2, the open-access BLOOM, and Anthropic’s Claude 2 (developed with Constitutional AI safety guardrails), along with transformers and generative AI more broadly, are no longer niche topics.

Generative AI has captured the public imagination by generating new content and acting as a co-worker, co-pilot, financial analyst, or coding buddy. The foundation models powering generative AI applications represent a step change.

Challenges in Putting Responsible AI Principles Into Practice

The need for a responsible approach to AI solutions is growing rapidly, driven by growing concern about the ethical, competitive, reputational, and regulatory implications of implementing AI tools. As awareness and scrutiny of the risks associated with AI increase, building responsible technology has become a paramount concern in organizations across all sectors.

Some of the current challenges to developing ethical and responsible AI come down to a few things:

- Technological innovations are moving fast, and finding frameworks that balance gains with risks can be challenging. Most businesses face overwhelming complexity as they race to understand the security, privacy, and safety risks at the speed of generative AI development.

- Leaders have heightened responsibilities in bridging the trust gaps that come with the new wave of innovations. The pioneers in responsible AI governance have been setting successful examples and providing benchmarks for industry best practices. Companies with robust RAI frameworks possess an advantage but must remain vigilant and adaptable, ensuring they evolve in sync with new developments in the responsible use of AI.

- Guardrails and best practices geared to particular use cases must keep pace with rapid tech innovation. Leaders face questions fueled by the powerful effects of generative AI, so knowing and using best practices for successful implementation and risk mitigation is essential.

Holistic Approach – The Game’s Rules Have Changed with Generative AI

The game’s rules have changed with generative AI, and leaders need a holistic approach that grounds a future-proof strategy at the core. Given the pace of change, the strategy needs to be set up correctly from the start so that it can be built on as more decisions are added in the future. The technology underlying generative AI is not just another tool in your business toolkit but a reinvention that will touch every aspect of our lives and businesses.

Accenture is predicting total enterprise reinvention. While there are already successful use cases of generative AI revolutionizing operations across industries, reinvention as a strategy sets new performance frontiers. Because generative AI capabilities will continue to change what is possible, leaders today need more than a toolkit update to navigate the shift.

To bridge the genAI trust gap, leaders should concentrate on three key areas to put responsible AI into practice.

Leaders must prioritize a responsible approach to technology, a people-first approach with diverse leadership, and processes that establish responsible governance of generative AI.

1. Technology - Key Players in the Generative AI Ecosystem

Understand why this new wave of AI innovation is moving so fast and what is powering it.

It all starts with the foundation. At the heart of any successful AI strategy lies a deep understanding of the generative AI landscape and the foundation models driving new generative AI capabilities. A clear understanding of how generative AI works, how the foundation models at its core power it, and how to mitigate risks and drive impact will empower leaders to navigate the AI roadmap with confidence and foresight.

Be clear on your competitive advantage and how it ties to successfully managing value and risks.

When considering AI implementation, it is essential to begin with the business strategy questions. Leaders should evaluate their AI strategies based on the risks and opportunities related to their specific priorities. The key is to identify the layer in which you compete. Will you focus on building models or helping others build them? Alternatively, will you focus on the data front by providing data, managing it in the cloud, or bringing it in-house? Perhaps your competitive advantage is at the infrastructure level, providing hardware for AI technology. Or perhaps you will double down on building AI applications. Whatever your choice, understanding your competitive advantage will be essential in implementing a responsible AI strategy.

2. People-First Approach and Diverse Leadership in the Future of Work

Take a People-First Approach in the Future of Work

To develop a successful AI strategy, businesses should prioritize people over technology. IBM suggests that generative AI is essentially about people: it involves reimagining work processes, providing adequate training and tools, and envisioning the future of work. To achieve this, companies must recognize the importance of skills that are on the rise, such as cognitive skills, creativity, analytical skills, systems thinking, technology skills, technological literacy, and AI skills. Leadership must understand the impact of the tooling and skills required and place people at the center of the AI strategy.

Ensure Diverse Leadership

With great power comes great responsibility. Every AI strategy must start with a question about the value we are creating. It is also important to note who is involved in AI decision-making. Do we have a diverse group of people with different perspectives and experiences? Systems thinking is a superpower here: seeing the big picture, finding a common language among tech, business, and industry leaders across disciplines, and breaking concepts down into simpler terms are essential for understanding, facilitating discussions, and achieving common goals. Furthermore, leadership must possess solid knowledge of the capabilities of AI and understand how to apply responsible AI correctly. This includes having expertise in technology and a strong Responsibility Quotient.

3. Processes - The Need for a Responsible AI Approach

Focus on implementing context-specific responsible AI governance.

When navigating these complexities, it is essential to consider the unique context of the business. Are your use cases central or peripheral to your operations? Are you using the company’s proprietary data or publicly available data? Do you need your own secure instances for your data? Careful consideration must be given to the AI solutions that best align with your industry, use cases, geographical regions, and AI governance requirements. Understanding the trade-offs and tools for evaluating, using, customizing, or developing AI solutions is key to committing to ethical AI practices.

Follow best practices to assess and mitigate the risks inherent in generative AI.

Leaders need a clear responsible AI governance framework to assess and mitigate the new risks of generative AI, including hallucinations, bias, privacy, and security. These choices aren’t made in isolation; they form part of a broader AI strategy and are interconnected with data governance frameworks, risk tolerance, and long-term growth plans that ensure a responsible AI approach.

Conclusion

Every business needs an AI strategy. Using AI as a co-strategizing partner, with responsibility built into the core from the ground up, opens future opportunities for organizations. The future of responsible AI lies in applying AI as your intelligent support system to strategize and co-create at each step of your trustworthy AI journey.

Are you looking for a partner? Here at TrustNet, we help guide enterprises and organizations as they develop and operationalize a custom-fit framework for their responsible AI journey.

Need Help Designing a Responsible AI Strategy?

TrustNet’s responsible AI consultants work with organizations to accelerate the responsible AI journey. Get in touch to set up a consultation.